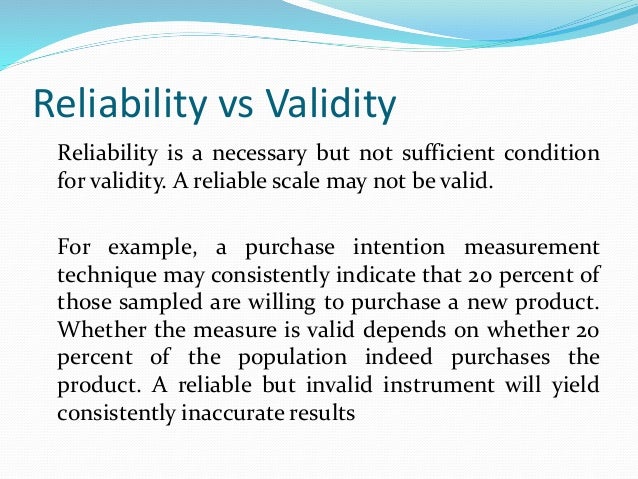

Content validity looks at whether the instrument adequately covers all the content that it should with respect to the variable. In other words, does the instrument cover the entire domain related to the variable, or construct, it was designed to measure? In an undergraduate nursing course with instruction about public health, an examination with content validity would cover all the content in the course, with greater emphasis on the topics that had received greater coverage or more depth. A subset of content validity is face validity, where experts are asked their opinion about whether an instrument measures the concept intended.

Construct validity refers to whether you can draw inferences about test scores related to the concept being studied. For example, if a person has a high score on a survey that measures anxiety, does this person truly have a high degree of anxiety? In another example, a test of knowledge of medications that requires dosage calculations may instead be testing maths knowledge. There are three types of evidence that can be used to demonstrate that a research instrument has construct validity:

- Homogeneity: the instrument measures one construct.
- Convergence: the instrument measures concepts similar to those of other instruments. If no similar instruments are available, this evidence cannot be obtained.
- Theory evidence: behaviour is similar to the theoretical propositions of the construct measured in the instrument. For example, when an instrument measures anxiety, one would expect to see that participants who score high on the instrument for anxiety also demonstrate symptoms of anxiety in their day-to-day lives.

The final measure of validity is criterion validity. A criterion is any other instrument that measures the same variable. Correlations can be conducted to determine the extent to which the different instruments measure the same variable. Criterion validity is measured in three ways:

- Convergent validity: shows that an instrument is highly correlated with instruments measuring similar variables.
- Divergent validity: shows that an instrument is poorly correlated with instruments that measure different variables. For example, there should be a low correlation between an instrument that measures motivation and one that measures self-efficacy.
- Predictive validity: means that the instrument should have high correlations with future criteria. For example, a score of high self-efficacy related to performing a task should predict the likelihood of a participant completing the task.
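The correlation checks described for criterion validity can be illustrated with a small sketch. The code below computes a Pearson correlation between scores on a new instrument and scores on two other instruments. Everything in it is an assumption made for illustration: the helper `pearson_r`, the variable names, and the scores themselves, which are invented and do not come from any study. The text does not say which correlation coefficient to use; Pearson's r is simply one common choice.

```python
from math import sqrt


def pearson_r(x, y):
    """Return the Pearson correlation between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products of deviations from each mean.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Sums of squared deviations for each list.
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("scores must vary for a correlation to be defined")
    return cov / sqrt(ss_x * ss_y)


# Hypothetical scores for six participants (invented for illustration only).
new_anxiety = [12, 18, 25, 31, 36, 40]          # the instrument being validated
established_anxiety = [10, 20, 22, 33, 35, 42]  # an instrument for the same variable
unrelated_scale = [25, 31, 12, 40, 18, 36]      # an instrument for a different variable

convergent_r = pearson_r(new_anxiety, established_anxiety)
divergent_r = pearson_r(new_anxiety, unrelated_scale)

print(f"convergent check: r = {convergent_r:.2f}")
print(f"divergent check:  r = {divergent_r:.2f}")
```

With these invented scores the first correlation is high and the second is low, which is the pattern convergent and divergent validity look for. What counts as "high" or "poor" correlation is a judgment call that depends on the field and the instruments, so no cutoff is built into the sketch.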
